M1stLTS3D

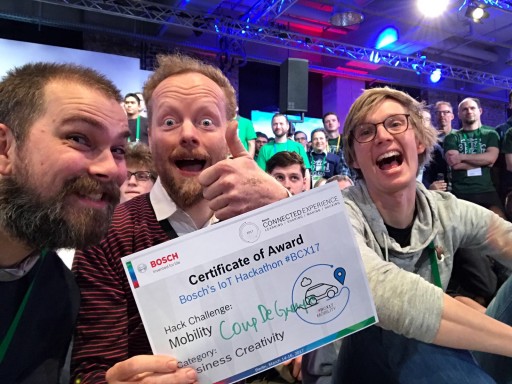

Hoog+Diep wins Kinect Hackathon Amsterdam!

Hoog+Diep has won the first prize at the Kinect for Windows Hackathon Amsterdam! During an inspiring 24 hours we imagined and designed the concept, built a first experiential prototype and presented “My first little toy story 3D”:

Hoog+Diep presents “My first little toy story 3D” at the Kinect v2 Hackathon Amsterdam

Kinect v2 hackathon

Having worked with the Kinect before, we knew about using its depth, video and audio sensors and its SDK features like skeleton tracking. But early this year Microsoft announced the Kinect v2, with improvements on all these features plus some new ones. This hackathon promised to be a great way to get to know the new possibilities, with engineers from the Kinect team coming over from Redmond and bringing enough hardware for us and our fellow inventors to play with.

Ben Lower of Microsoft showing the Kinect v2 capabilities

Ideating

The hackathon started with an overview of the Kinect’s capabilities to a packed Pakhuis de Zwijger, after which we started brainstorming ideas. We were looking for playful ideas that combined the potential of the technology with inspiration from real human behaviour. After exploring ideas for virtual/physical games, learning to dance and playing with LEGO, inspiration for the concept came from kids playing out adventures with their toys. An important consideration when evaluating the ideas was whether we could prototype their essence in the remaining 20 hours (minus a few hours of sleep).

Early sketch of “My first little toy story 3D”

Prototyping the essence of the experience

We iterated on the original idea and created a quick storyboard of the full experience kids could have with the concept. The storyboard tied potential ideas into one coherent concept, and also helped us identify the essence of the experience that was still feasible to prototype in the time left. It was clear to us that the essence was the magic moment of the player disappearing and the toys re-enacting the adventures on their own. After discussing the technical details we felt confident we could create a prototype that allowed people to experience this: start the recording, play with the toys as you normally would, stop the recording and see your toys magically re-enact the adventures on their own. Our technical approach for creating this prototype was:

1. get toys

2. create virtual counterparts of the toys by scanning them with a Kinect

3. record the movements in 3D space of the tangible toys while a player is playing

4. replay the movements with the virtual toys

5. take a snapshot (3D or photographic) of the empty play area (empty means no player or toy in shot)

6. use the snapshot as the background for the replay
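Steps 3 and 4 above can be sketched in a few lines. This is only an illustration of the record-and-replay idea, not the code we wrote at the hackathon: the names `Frame`, `Recording`, `add` and `position_at` are hypothetical, positions are assumed to be (x, y, z) tuples taken from whatever tracker is used, and no Kinect SDK calls are shown.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Frame:
    t: float         # seconds since the recording started
    position: Vec3   # tracked 3D position of the toy (assumed units: metres)


@dataclass
class Recording:
    frames: List[Frame] = field(default_factory=list)

    def add(self, t: float, position: Vec3) -> None:
        """Store one tracked sample; samples are assumed to arrive in time order."""
        self.frames.append(Frame(t, position))

    def position_at(self, t: float) -> Vec3:
        """Return the toy position at replay time t, interpolating between samples."""
        if not self.frames:
            raise ValueError("recording is empty")
        # Clamp to the first and last sample outside the recorded range.
        if t <= self.frames[0].t:
            return self.frames[0].position
        if t >= self.frames[-1].t:
            return self.frames[-1].position
        # Find the two samples that bracket t and blend between them.
        for a, b in zip(self.frames, self.frames[1:]):
            if a.t <= t <= b.t:
                span = b.t - a.t
                w = 0.0 if span == 0 else (t - a.t) / span
                return tuple(pa + w * (pb - pa)
                             for pa, pb in zip(a.position, b.position))
        return self.frames[-1].position
```

During replay, the virtual toy would be drawn at `position_at(elapsed_time)` on every rendered frame, on top of the empty-scene snapshot from steps 5 and 6.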

If we had time left we could work on:

– record sound while player is playing and use with replay video

– use voice to activate starting and stopping recording

– use certain sounds from audio recording to trigger special (video and audio) FX (a growling dino could for instance be replayed with a lowered voice and a screenshake)

– record two players playing and replay their adventures

Getting toys and creating virtual counterparts

After a trip to a local toy store we set about scanning the toys. In the concept, scanning is part of the product, but since it was not core to the experience we used an existing solution called Skanect. We first scanned the toys by moving the Kinect camera around our main characters.

Scanning toys attempt 1 – moving the Kinect camera

Not fully pleased with the results, we opted to keep the Kinect in one place and rotate the toys on an impromptu platform.

Scanning toys attempt 2 – rotating toys on platform and keeping Kinect in one place

Recording movements of the toys in 3 dimensions and replaying them with virtual toys

We knew that the Kinect skeleton tracking could detect the hand and thumb very precisely, so we started creating an application that could track and capture the hand of a user and replay its movement with a virtual blob. (The toys were still being scanned at this moment.)

Coding and testing to capture the hand of a user and replay its movement with a virtual blob

Eureka moment – we knew we had something special

Tracking the hand worked well but unfortunately failed when the user was holding a relatively large object (such as the toys we had bought). This was something we could have fixed in a project with more time, but in this hackathon setting it meant we had to switch to the less precise tracking of the forearm. When the forearm tracking worked and we had replaced the 3D blob with the scanned dino, we had our eureka moment. The concept came alive and we knew we had something special.

The forearm tracking worked and the scanned dino replaced the 3D blob. Eureka! The concept came alive and we knew we had something special.

From rudimentary to experiential prototype

But it was still a very rudimentary prototype: the movements were jerky, it worked with only one hand, the user did not magically disappear yet and it lacked the look and feel of a proper application. So the next hours were spent adding the magic disappearance, doing overall polishing and, most importantly, improving the code for tracking the toy movements for both hands.
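As an aside on the jerky movements: one common way to calm noisy tracking data is an exponential moving average over the recorded positions. The sketch below only illustrates that general technique and is not a description of what we did during the hackathon; the function name `smooth` and the `alpha` parameter are hypothetical.

```python
def smooth(positions, alpha=0.3):
    """Exponentially smooth a sequence of (x, y, z) positions.

    alpha close to 1 follows the raw data closely; alpha close to 0
    smooths more strongly but makes the toy lag behind the hand.
    """
    smoothed = []
    prev = None
    for p in positions:
        if prev is None:
            # The first sample has nothing to blend with, so keep it as-is.
            prev = tuple(float(c) for c in p)
        else:
            # Blend the new sample with the previous smoothed value.
            prev = tuple(alpha * c + (1 - alpha) * s for c, s in zip(p, prev))
        smoothed.append(prev)
    return smoothed
```

The trade-off is between smoothness and responsiveness, so `alpha` would need tuning by eye against real recordings.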

First walkthrough

We made some real progress, and before getting a few hours of sleep we did a first walkthrough of the experiential prototype. This involved finding the best camera position to record the adventures.

The first walkthrough of our experiential prototype

Communicating the full experience

While the experiential prototype was in development we also iterated on the full experience with the concept. We updated the earlier storyboard and created a polished version that told the full story while leaving room for the prototype to show the most essential part.

Presentations

After a few hours of sleep we returned to Pakhuis de Zwijger to prepare for the presentations. After some final polishing of the prototype and presentation we set up the prototype on a corner of the stage and sat down to enjoy the presentations and demos of all teams (see http://www.daretodifr.com/kinect-hackathon/). We saw some wonderful demos ranging from the scientific to the ridiculous.

We won!

After a relatively short break the jury returned and announced the results: “The first place goes to ‘My first toy story 3D’ by Hoog+Diep!”

The winners with their presents

We had a great time and met lots of friendly and inspiring fellow inventors. If you want to see the other presentations, take a look at http://www.daretodifr.com/kinect-hackathon/. Thanks again to Dare to Difr and the Microsoft Kinect Team for organising.

Update: Microsoft has a nice write-up of this Kinect Amsterdam hackathon at http://blogs.msdn.com/b/kinectforwindows/archive/2014/09/19/hackers-display-their-creativity-at-amsterdam-hackathon.aspx